Novel Multi-Level Coding and Architecture Enabling Fast Random Access for Flash Memory

September 10, 2024

Recent advancements in multi-level flash memory technology have led to its widespread application, driving a demand for higher performance. To address this demand, techniques such as dividing the word line (WL) and/or bit line have been explored. However, partitioning the array in this way increases die size. Moreover, multi-level flash memory inherently requires more read cycles than SLC (1 bit/cell), which is a significant drawback.

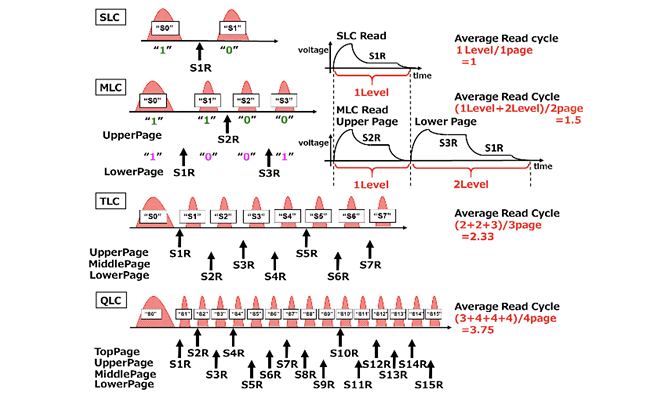

Figure 1 illustrates the threshold voltage (Vth) distributions and the WL waveforms during read operations for both SLC and MLC.

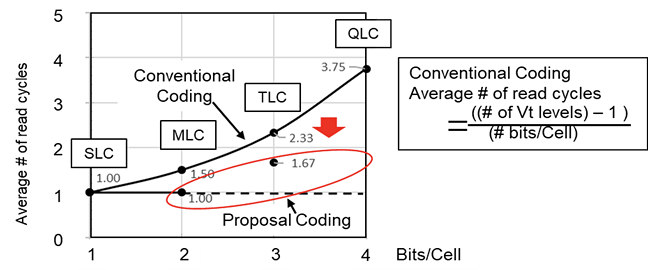

SLC requires one read level to distinguish between its two states, while MLC uses two read levels for the Lower Page*1 and one for the Upper Page, resulting in an average of 1.5 (= (1+2)/2) read cycles. Similarly, the average number of read cycles is 2.33 (= (2+2+3)/3) for TLC and 3.75 (= (3+4+4+4)/4) for QLC. As the number of levels increases, so does the number of read cycles, leading to a longer read time, tR (Figure 2). To mitigate this inherent challenge, this study proposes reducing the number of read cycles by sharing and storing multi-level data across multiple memory cells [1].

*1 A page is the unit of data read or written in one operation, typically 16 KB (16k x 8 bits). In MLC, one of the 2 bits per cell is treated as the Lower Page and the other as the Upper Page.
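The averages quoted above can be reproduced with a few lines of arithmetic. The following is a minimal sketch: the per-page read counts are taken from the text, while the names `READS_PER_PAGE` and `average_read_cycles` are illustrative and not from the original work. For TLC and QLC the text gives only the counts, so their order within each list carries no page assignment.

```python
from fractions import Fraction

# Read levels needed per page under conventional coding, as quoted in the text.
# For MLC the text assigns 2 reads to the Lower Page and 1 to the Upper Page;
# for TLC and QLC only the counts are given, so the order here is arbitrary.
READS_PER_PAGE = {
    "SLC": [1],
    "MLC": [2, 1],
    "TLC": [2, 2, 3],
    "QLC": [3, 4, 4, 4],
}

def average_read_cycles(reads):
    """Average number of read cycles over all pages sharing a cell."""
    return Fraction(sum(reads), len(reads))

averages = {name: average_read_cycles(r) for name, r in READS_PER_PAGE.items()}

for name, avg in averages.items():
    print(f"{name}: {float(avg):.2f} read cycles on average")
```

Using exact fractions avoids rounding until the values are printed, which makes it easy to check that 7/3 is the source of the 2.33 figure for TLC.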

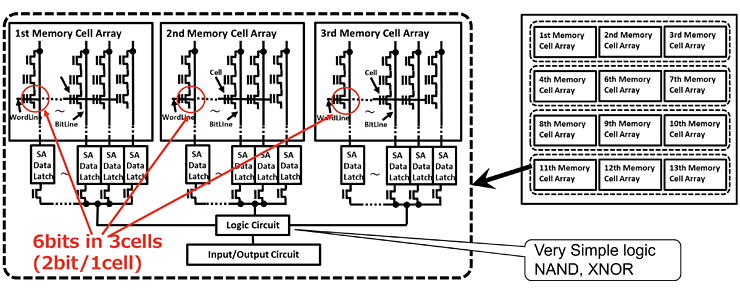

In MLC flash memory employing the proposed coding, three memory cells are combined, and 6 bits are stored in these 3 cells (Figure 3). The average number of read cycles is reduced from 1.5 (in conventional MLC coding) to 1 with the new coding, a 33% reduction.

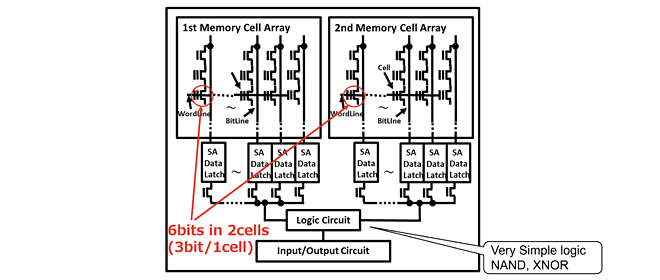

In TLC flash memory employing the proposed coding, two memory cells are combined, and 6 bits are stored in these 2 cells (Figure 4). The average number of read cycles is reduced from 2.33 (in conventional TLC coding) to 1.67 (= (1+1+2+2+2+2)/6) with the new coding, a 28.6% reduction. These approaches effectively reduce random access times without introducing new processes or materials, and without changing memory storage density.
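As a quick check of the reduction figures and of the unchanged storage density, the arithmetic can be sketched as follows. The function and variable names are illustrative assumptions. The six single-read pages for the proposed MLC coding are inferred from the stated average of 1, since every page needs at least one read. The TLC counts come directly from the text.

```python
from fractions import Fraction

def average(reads):
    """Average read cycles over a list of per-page read counts."""
    return Fraction(sum(reads), len(reads))

def reduction_percent(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return float((before - after) / before * 100)

# Conventional per-cell coding (read counts per page, from the text).
mlc_conventional = [2, 1]
tlc_conventional = [2, 2, 3]

# Proposed coding: 6 bits (pages) spread over a group of cells.
# MLC counts are inferred from the stated average of 1 (assumption).
mlc_proposed = [1, 1, 1, 1, 1, 1]   # 3 cells per group
tlc_proposed = [1, 1, 2, 2, 2, 2]   # 2 cells per group

mlc_reduction = reduction_percent(average(mlc_conventional), average(mlc_proposed))
tlc_reduction = reduction_percent(average(tlc_conventional), average(tlc_proposed))

# Storage density in bits per cell is the same as in conventional coding.
mlc_bits_per_cell = Fraction(len(mlc_proposed), 3)
tlc_bits_per_cell = Fraction(len(tlc_proposed), 2)

print(f"MLC: {mlc_reduction:.1f}% fewer read cycles, {mlc_bits_per_cell} bits/cell")
print(f"TLC: {tlc_reduction:.1f}% fewer read cycles, {tlc_bits_per_cell} bits/cell")
```

The MLC figure works out to one third (33.3%) and the TLC figure to 2/7 (28.6%), while the density stays at 2 and 3 bits per cell respectively.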

This research was presented at the IEEE International Memory Workshop (IMW) in May 2024 [1].

Reference

[1] N. Shibata et al., “Novel Multi-Level Coding and Architecture Enabling Fast Random Access for Flash Memory”, IMW 2024, pp. 17-20, May 2024.