
Development of Image Classification System Deploying Memory-Centric AI with High-capacity Storage

January 12, 2023

In deep learning, neural networks acquire knowledge and abilities through a training process in which their parameters are tuned. However, if a user obtains new data (e.g., new images) after training is complete and wants the neural network to acquire additional knowledge about that data, re-training is required. Re-training from scratch is costly in terms of time and power consumption. Re-training with only a subset of the data that includes the new data is one alternative, but it inevitably suffers from the catastrophic forgetting problem, in which previously learned knowledge and abilities are lost.

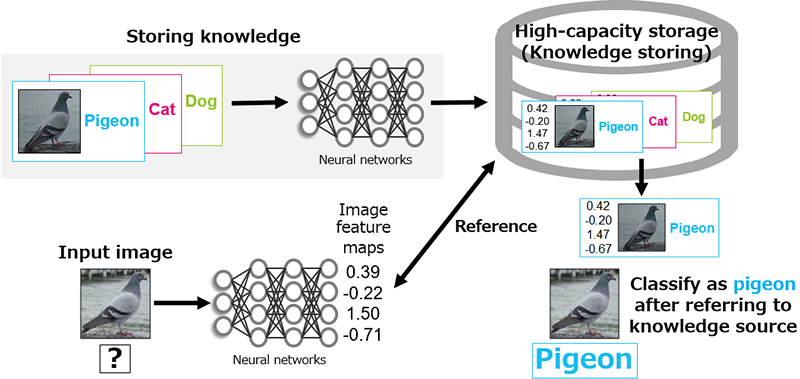

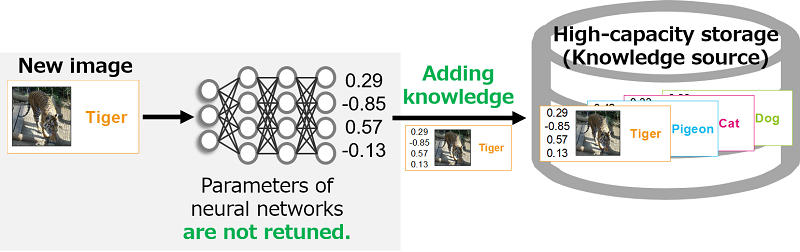

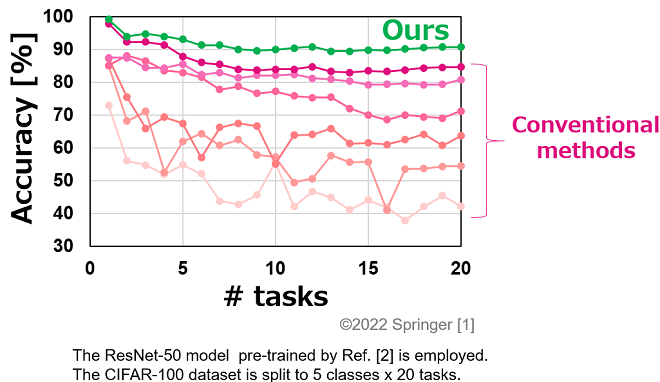

We have addressed the catastrophic forgetting problem in image classification tasks with an AI technology that utilizes high-capacity storage (Memory-Centric AI). We devised a system that stores large amounts of image data, labels, and image feature maps*1 as knowledge in the high-capacity storage and refers to that storage during image classification (Fig.1). In our system, when a user obtains new images, knowledge can be added or updated by storing additional image feature maps and labels, without re-training or retuning parameters (Fig.2). Because the parameters of the neural network are not retuned at all, the catastrophic forgetting problem is avoided. Knowledge can be added or updated while achieving better accuracy than conventional methods (Fig.3).
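The store-and-refer idea can be illustrated with a minimal sketch. The title of Ref. [1] indicates a kNN-based approach; everything else here is an assumption made for illustration: the `FeatureStore` class, the use of cosine similarity, the majority vote, and the tiny 2-dimensional vectors standing in for real feature maps produced by a fixed, pre-trained network.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class FeatureStore:
    """Hypothetical stand-in for the high-capacity storage: feature vectors plus labels."""

    def __init__(self):
        self.features = []  # stored feature vectors
        self.labels = []    # label of each stored vector

    def add(self, feature, label):
        # Adding knowledge is only an append; no network parameter is changed.
        self.features.append(list(feature))
        self.labels.append(label)

    def classify(self, query, k=3):
        # Rank stored vectors by similarity to the query and vote among the top k.
        ranked = sorted(
            range(len(self.features)),
            key=lambda i: cosine_similarity(query, self.features[i]),
            reverse=True,
        )
        votes = Counter(self.labels[i] for i in ranked[:k])
        return votes.most_common(1)[0][0]


store = FeatureStore()
store.add([1.0, 0.0], "cat")
store.add([0.9, 0.1], "cat")
store.add([0.0, 1.0], "dog")
print(store.classify([0.8, 0.2]))

# A new class is introduced later simply by storing more vectors.
store.add([-1.0, 0.0], "bird")
store.add([-0.9, -0.1], "bird")
print(store.classify([-0.9, 0.1]))
print(store.classify([0.8, 0.2]))  # earlier knowledge is still intact
```

Because the stored "cat" and "dog" entries are never modified when "bird" is added, earlier predictions cannot degrade through parameter updates. A real system would replace the linear scan with a search suited to large storage.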

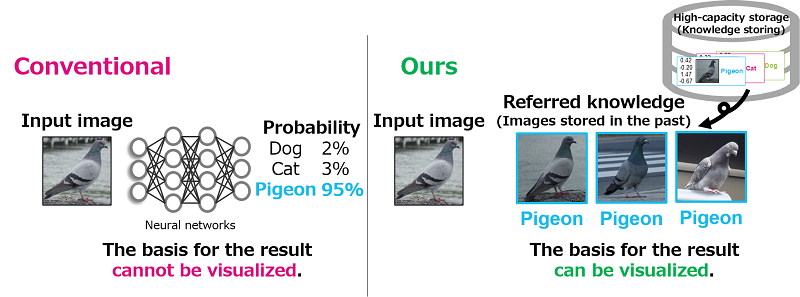

In addition, our system is expected to improve the explainability of AI*2 and alleviate the black box problem*3. With conventional methods, when a neural network classifies an image, humans cannot interpret the process or the basis for the result. In contrast, our system can visualize the basis for a classification result by listing the stored data that was referred to when classifying the image (Fig.4). Furthermore, by analyzing the referred data, the contribution of each stored data item can be evaluated according to how frequently it is referenced.
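The two ideas in this paragraph, listing the referred data and counting how often each stored item is referenced, can be sketched as follows. This is an illustrative sketch only: the item identifiers (`img_001` and so on), the squared Euclidean distance, and the helper `referenced_items` are hypothetical and are not taken from Ref. [1].

```python
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def referenced_items(query, stored, k=2):
    """Return (id, label) of the k stored items closest to the query."""
    ranked = sorted(stored, key=lambda item: squared_distance(query, item["feature"]))
    return [(item["id"], item["label"]) for item in ranked[:k]]


# Hypothetical stored knowledge: toy 2-dimensional features with labels.
stored = [
    {"id": "img_001", "label": "cat", "feature": [1.0, 0.0]},
    {"id": "img_002", "label": "cat", "feature": [0.9, 0.1]},
    {"id": "img_003", "label": "dog", "feature": [0.0, 1.0]},
]

reference_counts = Counter()
for query in ([0.99, 0.0], [0.8, 0.2], [0.1, 0.9]):
    evidence = referenced_items(query, stored, k=2)
    print(query, "->", evidence)  # the stored items that support this prediction
    reference_counts.update(item_id for item_id, _ in evidence)

# Items referenced more often contributed to more classification results.
print(reference_counts.most_common())
```

The per-query list plays the role of the visualized basis for a result, and the accumulated counts give a simple way to compare how much each stored item contributes.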

- *1 Image feature maps: multidimensional (e.g., 1,024-dimensional) numerical data obtained through neural network operations.

- *2 Explainability of AI: the possibility of explaining the basis and reasons for results predicted by AI in a way that humans can interpret.

- *3 Black box problem: the process leading to the results predicted by AI is not interpretable to humans, so the AI behaves as a black box.

We presented this result at the European Conference on Computer Vision 2022 (ECCV 2022), one of the top conferences in the field of computer vision [1].

This material is a partial excerpt and a reconstruction of Ref. [1] © 2022 Springer.

Company names, product names, and service names may be trademarks of third-party companies.

Reference

[1] K. Nakata, Y. Ng, D. Miyashita, A. Maki, Y.-C. Lin, J. Deguchi, “Revisiting a kNN-Based Image Classification System with High-Capacity Storage,” Computer Vision - ECCV, pp. 457-474, 2022.

[2] A. Radford, et al., “Learning Transferable Visual Models From Natural Language Supervision,” ICML, pp. 8748-8763, 2021.